Formal Engineering Support for Field-Programmable Gate Arrays

With computing causing a substantial amount of carbon emissions nowadays, increasing the energy-efficiency of computing is of principal importance. However, the most energy-efficient computation technologies are unfortunately quite hard to develop for. Field-programmable gate arrays (FPGAs) fall into this category and are nowadays not only used in embedded systems, but also in data centers.

FPGAs are configured like hardware rather than programmed like software. This requires the developer to think in terms of clock cycles rather than computation steps in order to utilize them in an efficient way. As a consequence, developing for them is substantially more complicated, especially for software engineers. Hence, they are often ignored in favor of more energy-inefficient computing technologies.

While industry started to address this problem using high-level synthesis (HLS), where circuit descriptions are extracted from software-like program code, using HLS in an effective way requires a lot of experience and the resulting implementations often utilize more FPGA resources than manually made implementation for the same tasks. This is rooted in the problem that when describing a computation as software, a lot of the problem structure that is independent from a software representation is lost, and a HLS tool has to recover a crude version of it. At the same time, the gap is tightened from the other side by novel hardware description languages that increase developer efficiency. They do not, however, change the fact that resource utilization needs to be planned by hand in a cycle-accurate way when describing hardware, leaving the development difficult.

This observation calls for a fundamental change in the way FPGA-based implementations for everyday computation tasks (such as in computing centers) are built. The intricate details of mapping a task to hardware needs to be left to the computer, as it requires assigning FPGA resources to task parts. At the same time, the employed computation engine needs a task representation that supports solving such an assignment problem. This suggests a representation level in which the engineer only writes what is to be computed but now how, as the latter would prematurely fix a solution.

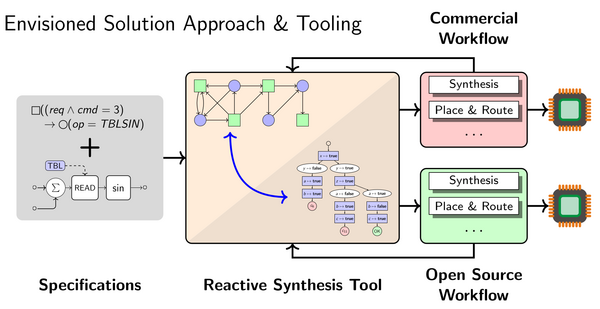

The VolkswagenStiftung Momentum endeavor addresses this challenge by marrying the concept of reactive synthesis with FPGAs as target domain. In reactive synthesis, implementations are built automatically from specifications in temporal logic. Reactive synthesis works well if the focus of the implementation is on the control flow, but integrating data into a synthesis process is difficult. The core insight used in this endeavor is that data does not have to be handled in general for making FPGA-based development easier – rather, it suffices to synthesize an orchestrator for the data-processing blocks describing the data processing task parts on an FPGA, for which a coarse task abstraction suffices. Synthesizing the coordination between different data processing blocks has the potential to even make FPGA-based implementations use fewer resources (and hence less costly) by routing data so that the same building blocks can be used for different purposes in different clock cycles. In this way, the results of the endeavor will not only help with energy-efficiency, but also make system implementations better.

The execution of this endeavor requires research on FPGAs to go hand-in-hand with research on reactive synthesis, which is traditionally located in the field of formal methods. A core difficulty fpr reactive synthesis in this context is the computation of a good implementation, and in the scope of this endeavour this mean to define this extraction process in a way suitable for FPGAs. Reactive synthesis is typically reduced to solving a game between to players, and extracting an implementation that used FPGA resources well then means to define what properties a strategy for one the players, which represents an implemention, should have. At the same time, game representations and game solving optimizations have to be researched that encode the information routing and coordination problems in FPGA-based implementations in a suitable way.

A couple of lead example FPGA applications have already been defined, which will allow to work on these questions in a systematic way and with demonstratable outcomes.

The developed approaches are expected to be relatively agnostic to the FPGA manufacturer. A Xilinx Alveo U50 card is already available to the research group as an example of a large FPGA. It is expected that some demonstrations will also run on low-cost FPGAs.

Volkswagenstiftung endeavors have the interesting aspect that the research group head to which they are awarded should not already work in the target area of research. In the scope of this concrete endeavor, the ACPS research group does not already have performed work on FPGAs yet. However, we are already active in the field of reactive synthesis.

![[Translate to English:] [Translate to English:]](/fileadmin/_processed_/2/b/csm__DSC5010_e2cbf13661.jpg)